Unmasking Clothoff: The Dark Side Of AI "Undressing" Technology

In the rapidly evolving landscape of artificial intelligence, where innovation promises to reshape industries and daily life, a darker, more insidious application has emerged: the AI "undressing" app known as Clothoff. This technology, which leverages advanced algorithms to digitally remove clothing from images, raises profound ethical, legal, and societal questions that demand our immediate attention. Far from being a harmless novelty, Clothoff represents a significant threat to the privacy, consent, and dignity of individuals, particularly women, and it has become a stark example of AI's potential for misuse.

The existence and proliferation of such applications, as highlighted by investigations from reputable sources like The Guardian, underscore a critical challenge facing the digital age: how do we regulate technologies that blur the lines between reality and fabrication, especially when they facilitate non-consensual content? This article delves deep into the phenomenon of Clothoff, exploring its operational mechanics, the alarming scale of its reach, and the devastating implications for victims, all while advocating for a more responsible and ethical approach to AI development and usage.

Table of Contents

- Understanding Deepfakes and the Rise of AI Image Generation

- Clothoff: Unveiling the "Undressing" App

- The Alarming Scale and User Engagement of Clothoff

- The Ethical Abyss: Non-Consensual Deepfakes and Their Impact

- The Business of Exploitation: How "Undressing" Apps Thrive

- Community and Content Sharing: The Digital Ecosystem Around Clothoff

- Navigating the Digital Minefield: Protecting Yourself and Others

- The Future of AI: A Call for Responsibility and Ethical Innovation

Understanding Deepfakes and the Rise of AI Image Generation

The advent of artificial intelligence has brought about unprecedented capabilities in content creation, from generating realistic text to synthesizing images and videos. Among these advancements, "deepfake" technology stands out for its ability to manipulate or generate media that appears authentic but is, in fact, fabricated. From its earliest days, deepfake technology was used for non-consensual pornography as well as for humorous or artistic purposes, and increasingly sophisticated algorithms have made it ever harder to distinguish real from fake. AI image generation, a broader category, encompasses tools that can create images from text prompts or modify existing ones. While many of these tools offer legitimate and creative applications, such as generating unique artwork or enhancing photographs, the underlying technology can be repurposed for malicious ends. The core principle involves neural networks learning patterns from vast datasets in order to generate new data that mimics the original. When applied to images of people, this power becomes a double-edged sword, capable of both groundbreaking creativity and profound harm. The ethical implications of such tools are paramount, especially when they are used to create non-consensual content.

Clothoff: Unveiling the "Undressing" App

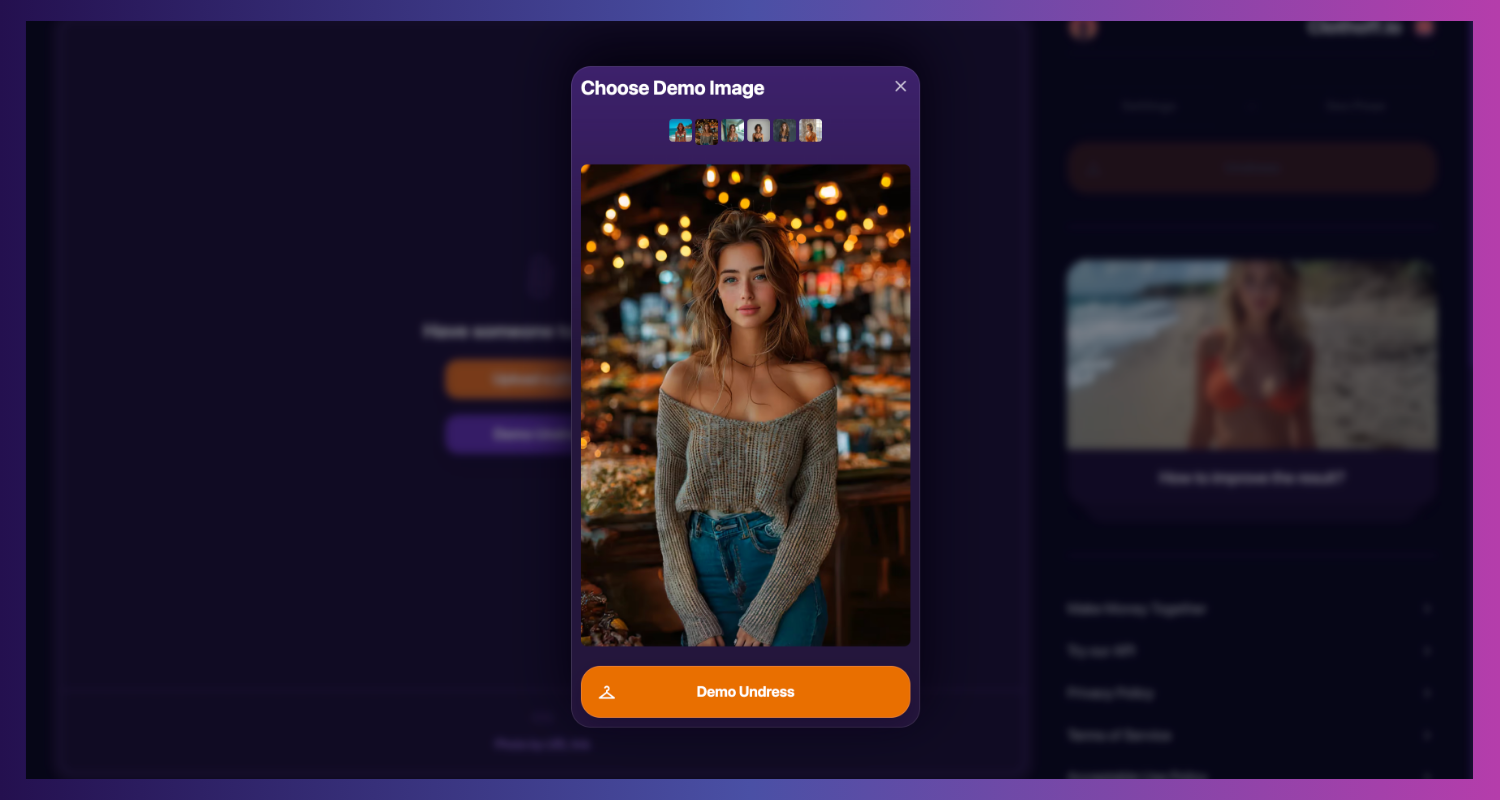

At the heart of our discussion is **Clothoff**, an application that has gained significant notoriety for one specific and highly controversial function: digitally "undressing" people in photographs. Press coverage has described it as a "deepfake pornography AI app," a chilling label that leaves no ambiguity about its nature. The app invites users to "undress" images, in effect creating non-consensual intimate imagery (NCII) through artificial intelligence. Its operational premise is deceptively simple: a user uploads an image, and the AI model processes it to generate a modified version in which the subject appears nude or partially nude. Nothing real is revealed; the system fabricates synthetic skin and anatomy in place of the original clothing, making it appear as though the person was never clothed. As these models have improved, the generated images have become increasingly convincing. Unlike ethical AI tools that focus on creative or productivity enhancements, Clothoff's design is inherently exploitative, built on the violation of personal privacy and dignity.

The Alarming Scale and User Engagement of Clothoff

The reach and popularity of **Clothoff** are deeply concerning. According to investigative reporting, its website "receives more than 4m monthly visits." This staggering figure underscores the widespread demand for such content and the ease with which the technology can be accessed. Traffic on this scale could translate into a very large number of non-consensual images being generated and, in many cases, shared. The operators behind Clothoff have also taken a calculated approach: "In the year since the app was launched, the people running Clothoff have carefully" managed its presence. This suggests that they know how controversial their service is and are deliberately working to keep it available despite ethical and legal challenges. Their marketing, which aims to keep things "casual and fun, but crystal clear for everyone," attempts to normalize a deeply harmful activity and downplays the severe consequences for victims. By inviting users to "shape the future of Clothoff with your votes" and claiming to value user "opinion as gold," the operators run an active feedback loop. That loop strengthens the user base and steers development toward what users want, regardless of ethical boundaries. This level of engagement creates a dangerous ecosystem in which the creation and consumption of NCII are actively encouraged and refined.

The Ethical Abyss: Non-Consensual Deepfakes and Their Impact

The core issue with **Clothoff** and similar applications is ethical, above all the egregious violation of consent. "Undressing" someone without their knowledge or permission, even digitally, is a profound invasion of privacy and a form of sexual exploitation. It strips individuals of their autonomy and subjects them to a form of digital sexual assault. This is not merely a technical novelty; it is a tool that facilitates harm.

The Psychological Devastation on Victims

The impact on victims of non-consensual deepfake pornography is severe and long-lasting. Imagine discovering that a fabricated intimate image of you is circulating online. The psychological trauma can include:

- **Profound humiliation and shame:** Victims often feel violated and exposed, leading to intense embarrassment.
- **Anxiety and paranoia:** Constant fear that the images will resurface or be seen by friends, family, or employers.
- **Depression and suicidal ideation:** The overwhelming emotional distress can lead to severe mental health issues.
- **Reputational damage:** The fabricated images can destroy careers, relationships, and social standing, even after they are shown to be fake.
- **Loss of control and agency:** Victims are stripped of their bodily autonomy and feel powerless against the spread of the images.

Discussions of these issues, including those within feminist communities, highlight their deep societal implications and the need for robust responses to protect people from such harm. The emotional scars left by these digital violations can be as real and debilitating as those left by physical assault.

The Legal Battleground Against Non-Consensual Intimate Imagery

In response to the proliferation of NCII, including deepfakes, many jurisdictions have begun to enact specific laws that criminalize the creation and distribution of such content, and in some cases its possession. In the United States, for instance, several states have passed legislation making deepfake pornography illegal, and federal efforts are underway. Globally, countries are grappling with how to adapt existing laws on sexual exploitation, harassment, and privacy to this new digital threat. The legal landscape remains complex, however. Challenges include:

- **Jurisdictional issues:** Deepfakes can be created anywhere and distributed globally, making it difficult to enforce laws across borders.
- **Identification of perpetrators:** Online anonymity makes it hard to track down those responsible for creating and sharing the content.
- **Defining "consent":** In a non-consensual deepfake the subject's consent is absent by definition. Legal frameworks still need to state clearly that creating or sharing such imagery without consent is an offense.
- **Technological evolution:** Laws struggle to keep pace with rapid advances in AI technology.

Despite these challenges, the legal fight against deepfake pornography is crucial. It sends a clear message that such acts will not be tolerated and gives victims avenues for justice and redress. More broadly, it is part of a wider need for educational resources that help people protect themselves from digital exploitation and harm.

The Business of Exploitation: How "Undressing" Apps Thrive

The alarming success of **Clothoff**, evidenced by its millions of monthly visits, points to a disturbing business model built on exploitation. Even if the app offers a "free" tier, as some AI photo generation tools do, that volume of traffic suggests monetization through advertising, premium features, or data collection. The allure of "absolutely free" services can often mask hidden costs or ethical compromises. Clothoff's operators capitalize on demand for illicit content, providing a platform where users can easily generate, and potentially share, deepfake pornography. Their careful operation implies a sophisticated understanding of how to navigate legal gray areas while maintaining a user base. This business model thrives on anonymity, easy access to the technology, and a lack of robust enforcement. Engagement strategies such as soliciting user feedback and votes steer the app's development toward user demand, further entrenching its problematic services. The result is a vicious cycle: demand fuels development, and development makes access easier, perpetuating the harm.

Community and Content Sharing: The Digital Ecosystem Around Clothoff

Dedicated online communities further illustrate the problematic ecosystem surrounding applications like **Clothoff**. One Reddit community, clothoff18, describes itself as "A place where you can post images that are modified by the site clothoff.io" and had just 2 subscribers. The count is small, but the community shows that spaces are being deliberately created for sharing and consuming content generated by the app. The "18" in its name indicates an adult-oriented and likely illicit focus. Communities like this, often found on platforms such as Reddit, serve as hubs where users can:

- **Share generated content:** Distributing the non-consensual images.
- **Discuss techniques:** Exchanging tips on using the app or producing more "realistic" deepfakes.
- **Request specific content:** Soliciting others to create deepfakes of particular individuals.
- **Foster a sense of belonging:** Normalizing the creation and consumption of NCII within a like-minded group.

Such communities amplify the harm caused by the app itself. They move beyond individual generation to widespread distribution, making it far harder for victims to regain control over their digital identities and reputations. Platforms like Reddit, which promise users "the best of the internet in one place," face a significant challenge in policing such harmful subreddits while upholding principles of free speech. The tension between platform responsibility and user autonomy is a constant battle in the fight against online abuse.

Navigating the Digital Minefield: Protecting Yourself and Others

In an era where technologies like **Clothoff** exist, digital literacy and proactive measures are more critical than ever. Protecting oneself and others from the harms of deepfakes requires a multi-faceted approach that combines awareness, critical thinking, and knowledge of available resources.

Identifying Deepfakes and Fabricated Content

While deepfake technology is becoming increasingly sophisticated, subtle clues can often help identify fabricated content:

- **Inconsistent lighting or shadows:** The lighting on the subject may not match the background.
- **Unnatural blinking or eye movements (in video):** Eyes may not blink naturally, or the gaze may be slightly off.
- **Distorted facial features or skin texture:** Skin may appear too smooth or too grainy, or facial features may be slightly asymmetrical.
- **Unusual body proportions or anomalies:** The generated body may not align properly with the head or limbs.
- **Implausible hair or clothing details:** Hair may look like a wig, or clothing may have strange folds or textures.
- **Audio inconsistencies (in video):** Lip sync may be off, or the voice may sound robotic or unnatural.
- **Contextual red flags:** If content seems sensational or out of character for the person depicted, it warrants skepticism.

Approach all online content with a critical eye, especially images or videos that seem too good (or too bad) to be true. None of these clues is conclusive on its own, and the most convincing fakes may show none of them.

Reporting Mechanisms and Seeking Support

If you or someone you know becomes a victim of deepfake pornography or any other form of NCII, act quickly:

- **Report to the platform:** Contact the platform hosting the content (e.g., a social media service, website, or hosting provider) and report it for violating their terms of service. Most platforms have policies against NCII.
- **Contact law enforcement:** File a police report. Many jurisdictions now have specific laws against deepfake pornography.
- **Seek legal counsel:** Consult an attorney specializing in digital privacy or cybercrime to explore legal options.
- **Use victim support organizations:** Organizations such as the Cyber Civil Rights Initiative (CCRI) offer resources, support, and guidance for victims of NCII.
- **Document everything:** Keep records of the content, where it was posted, and any communication with platforms or law enforcement.

Online communities devoted to scams, where people come to "educate themselves, find support, and discover" solutions, show how valuable shared educational resources can be in countering digital harms. The same principle applies to the fight against deepfake pornography.

The Future of AI: A Call for Responsibility and Ethical Innovation

The existence of applications like **Clothoff** is a stark reminder of the ethical imperative in AI development. While AI offers immense potential for good, its power can also be wielded for profound harm if it is not guided by strong ethical principles and robust regulation. Legitimate AI image generation tools show that the same underlying technology can serve creative and beneficial ends, in sharp contrast to the malicious intent of Clothoff. Moving forward, several key areas require urgent attention:

- **Ethical AI Development:** Developers and researchers must prioritize ethical considerations from the outset, embedding safeguards against misuse into AI models. This includes "red-teaming" approaches that identify potential malicious applications before deployment.
- **Stronger Regulation and Enforcement:** Governments worldwide need to enact and enforce comprehensive laws that criminalize the creation and dissemination of non-consensual deepfakes. International cooperation is crucial to address the cross-border nature of this issue.
- **Platform Accountability:** Online platforms must take greater responsibility for the content they host. This includes proactive content moderation, swift removal of illegal material, and transparent reporting mechanisms.
- **Public Education and Awareness:** Educating the public about the dangers of deepfakes, how to identify them, and how to report them is essential. This includes fostering critical digital literacy skills from a young age.
- **Technological Countermeasures:** Research into deepfake detection and digital watermarking of authentic content can help combat the spread of fabricated media.

The discussion around **Clothoff** and similar apps is not just about technology; it is about human dignity, privacy, and safety in the digital age. It is about ensuring that the future of AI is built on a foundation of responsibility, not exploitation.

Conclusion

The rise of **Clothoff** and other deepfake "undressing" applications represents a critical juncture in the ethical development and deployment of artificial intelligence. As we've explored, these tools facilitate the creation of non-consensual intimate imagery, inflicting profound psychological harm on victims and eroding trust in digital media. The alarming scale of Clothoff's traffic and the existence of dedicated sharing communities underscore the urgent need for a collective response from technologists, lawmakers, platforms, and the public.

While the promise of AI is vast, its potential for misuse, as exemplified by **Clothoff**, demands vigilance and proactive measures. It is imperative that we prioritize consent, privacy, and ethical considerations in every facet of AI development. The future of AI should empower and uplift, not exploit and violate. We must work together to create a digital environment where such malicious applications cannot thrive, ensuring that innovation serves humanity responsibly.

**What are your thoughts on the ethical implications of AI "undressing" apps like Clothoff? Share your perspective in the comments below, and help us raise awareness about the critical need for responsible AI development and digital literacy. If you found this article informative, please consider sharing it to educate others about these pressing issues.**

Author Details:

- Name : Allene Pacocha I